TECH TALK: Life & Death of the Microchip

ERIC’S TECH TALK

by Eric Austin

Computer Technical Advisor

The pace of technological advancement has a speed limit and we’re about to slam right into it.

The first electronic, programmable, digital computer was designed in 1944 by British telephone engineer Tommy Flowers, while working in London at the Post Office Research Station. Named the Colossus, it was built as part of the Allies’ wartime code-breaking efforts.

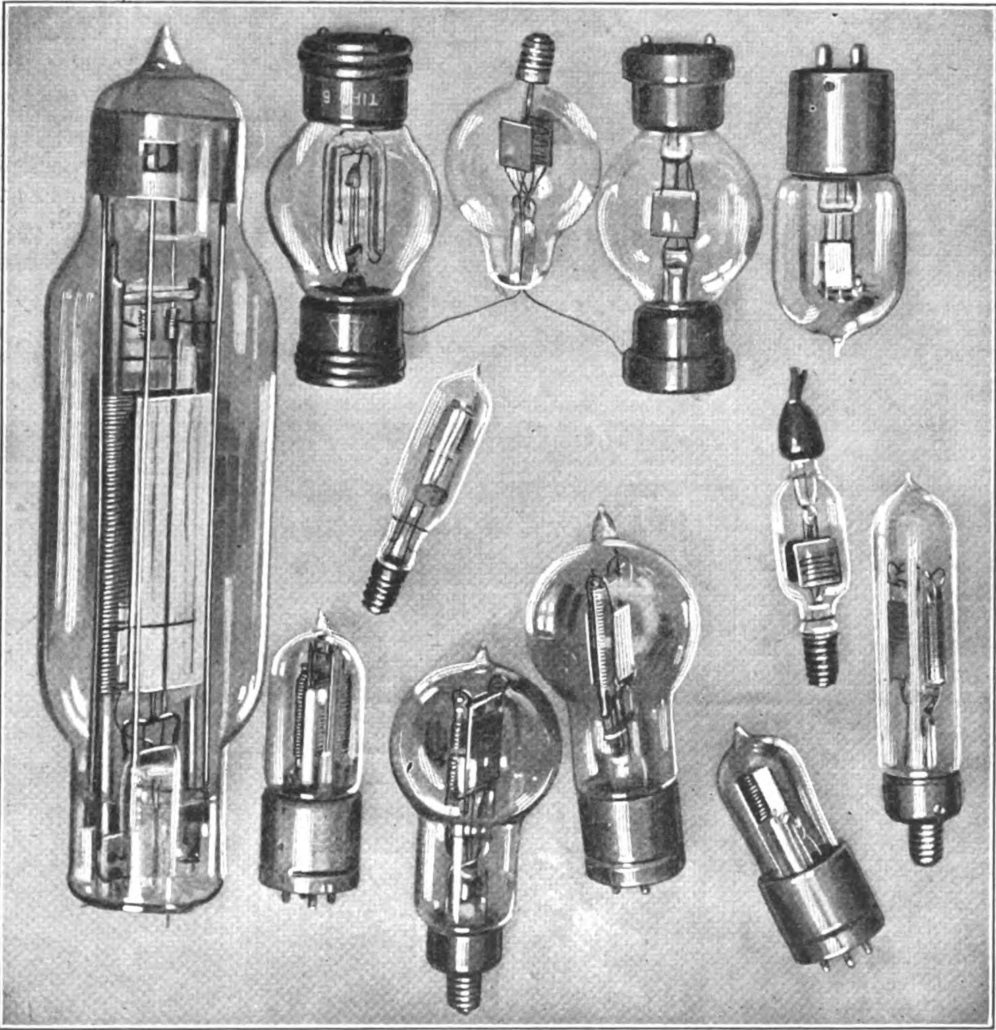

The Colossus didn’t get its name from being easy to carry around. Computers work in binary code, with each 0 or 1 represented by a switch that is either open or closed, on or off. In 1944, before the invention of the silicon chip that powers most computers today, this was accomplished using vacuum-tube technology. A vacuum tube is a small, vacuum-sealed glass chamber that serves as a switch to control the flow of electrons through it. Looking much like a complicated light bulb, a vacuum tube was difficult to manufacture, bulky and highly fragile.

Engineers were immediately presented with a major problem. The more switches a computer has, the faster it is and the larger the calculations it can handle. But each switch is an individual glass tube, and each must be wired to every other switch on the switchboard. This means that in a computer with 2,400 switches, like the Colossus, each switch would need 2,399 wires, one to every other switch. That is almost six million wire ends to connect, or nearly three million individual wires. As additional switches are added, the wiring grows far faster than the number of switches themselves: doubling the switches roughly quadruples the connections.
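For readers who like to check the arithmetic, here is a minimal sketch of the wiring count, assuming the simplified picture above in which every switch is wired directly to every other. The function name `full_mesh_wires` is made up for this illustration. Counting each wire once gives n × (n − 1) / 2; counting it from both ends gives n × (n − 1), which is where a figure close to six million comes from for 2,400 switches.

```python
def full_mesh_wires(switches: int) -> int:
    """Wires needed if every switch is wired directly to every other switch.

    Each wire joins two switches, so the n * (n - 1) wire ends
    are halved to count every wire exactly once.
    """
    return switches * (switches - 1) // 2


# Compare a few sizes, including the 2,400 switches quoted for the Colossus.
for n in (10, 100, 2_400, 4_800):
    wires = full_mesh_wires(n)
    print(f"{n:>5} switches -> {wires:>10,} wires ({2 * wires:,} wire ends)")
```

Going from 2,400 to 4,800 switches doubles the parts but roughly quadruples the wiring, which is the heart of the problem.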

This became known as the ‘tyranny of numbers’ problem, and because of it, for the first two decades after the Colossus was introduced, it looked as though computer technology would forever be out of reach of the average consumer.

Then two engineers, working separately in California and Texas, discovered a solution. In 1959, Jack Kilby, working at Texas Instruments, submitted his design for an integrated circuit to the U.S. Patent Office. A few months later, Robert Noyce, a co-founder of the influential Fairchild Semiconductor in Palo Alto, California, submitted his own patent. Although they each approached the problem differently, it was the combination of their ideas that resulted in the microchip we’re familiar with today.

The advantages of this new idea, to print microscopic transistors on a wafer of semiconducting silicon, were immediately obvious. It was cheap, could be mass produced, and most importantly, its performance was scalable: as our miniaturization technology improved, we were able to pack more transistors (switches) onto the same chip of silicon. A chip with a higher number of transistors resulted in a more powerful computer, which allowed us to further refine our fabrication process. This self-fed cycle of progress is what has fueled our technological advancements for the last 60 years.

Gordon Moore, who, along with Robert Noyce, later founded the microchip company Intel, was the first to understand this predictable escalation in computer speed and performance. In a paper he published in 1965, Moore observed that the number of components we could print on an integrated circuit was doubling every year. Ten years later the pace had slowed somewhat and he revised his estimate to doubling every two years. Nicknamed “Moore’s Law,” it’s a prediction that has remained relatively accurate ever since.
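To see how much the doubling period matters, here is a small sketch comparing Moore’s original estimate (doubling every year) with his revised one (doubling every two years). The starting count of 1,000 components and the function name `projected_components` are assumptions chosen purely for illustration, not figures from Moore’s paper.

```python
def projected_components(start: int, years: int, doubling_period_years: int) -> int:
    """Project a component count forward under a fixed doubling period.

    Only completed doubling periods are counted.
    """
    doublings = years // doubling_period_years
    return start * 2 ** doublings


START = 1_000  # hypothetical starting count, for illustration only

# Ten years of growth under each of Moore's two estimates.
yearly = projected_components(START, years=10, doubling_period_years=1)
two_yearly = projected_components(START, years=10, doubling_period_years=2)

print(f"Doubling every year:      {yearly:,}")
print(f"Doubling every two years: {two_yearly:,}")
```

Over the same ten years, the yearly pace ends up 32 times ahead of the two-year pace, which is why that small revision was a big deal.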

This is why every new iPhone is faster, smaller, and more powerful than the one from the year before. In 1944, the Colossus was built with 2,400 binary vacuum tubes. Today the chip in your smartphone possesses something in the neighborhood of seven billion transistors. That’s the power of the exponential growth we’ve experienced for more than half a century.
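One way to get a feel for that jump is to ask how many times 2,400 has to double to reach seven billion. The sketch below is back-of-the-envelope arithmetic using only the two figures quoted above; the function name `doublings_between` is made up for this example, and vacuum tubes and transistors are of course not the same kind of part.

```python
import math


def doublings_between(start_count: float, end_count: float) -> float:
    """How many times start_count must double to reach end_count."""
    return math.log2(end_count / start_count)


# From the Colossus's 2,400 tubes to roughly seven billion transistors.
steps = doublings_between(2_400, 7_000_000_000)
print(f"about {steps:.1f} doublings")
```

The answer lands between 21 and 22 doublings. A few million-fold of growth takes surprisingly few steps when every step is a doubling.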

But this trend of rapid progress is about to come to an end. In order to squeeze seven billion components onto a tiny wafer of silicon, we’ve had to make everything really small. Like, incomprehensibly small. Components are only a few nanometers wide, with fewer than a dozen nanometers between them. For some comparison, a sheet of paper is about 100,000 nanometers thick. We are designing components so small that they will soon be only a few atoms across. At that point electrons begin to bleed from one transistor into another, because of an effect called ‘quantum tunneling,’ and a switch that can’t be reliably turned off is no switch at all.

Experts differ on how soon the average consumer will begin to feel the effects of this limitation, but most predict we have less than a decade to find a solution or the technological progress we’ve been experiencing will grind to a stop.

What technology is likely to replace the silicon chip? That is exactly the question companies like IBM, Intel, and even NASA are racing to answer.

IBM is working on a project that aims to replace silicon transistors with ones made of carbon nanotubes. The change in materials would allow manufacturers to reduce the space between transistors from 14 nanometers to just three, allowing us to cram even more transistors onto a single chip before running into the electron-bleed effect we are hitting with silicon.
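As a rough idea of what that tighter spacing could buy, here is an idealized sketch. It assumes transistors sit on a flat square grid and that the spacing between them is the only limit, so shrinking the spacing packs more in along both the length and the width of the chip. Real chip layouts are far messier, this is not a figure published by IBM, and the function name `density_gain` is invented for the example.

```python
def density_gain(old_spacing_nm: float, new_spacing_nm: float) -> float:
    """Idealized gain in transistors per unit area when grid spacing shrinks.

    Assumes a square grid, so the gain applies in two dimensions
    and the linear ratio is squared.
    """
    return (old_spacing_nm / new_spacing_nm) ** 2


# Spacing figures quoted above: 14 nanometers down to 3.
gain = density_gain(14, 3)
print(f"roughly {gain:.0f} times as many transistors in the same area")
```

Under those simplifying assumptions, the same area could hold roughly 22 times as many transistors.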

Another idea with enormous potential, the quantum computer, was first proposed back in the early 1980s, but has only recently become a reality. Whereas the binary nature of our current digital technology only allows for a switch to be in two distinct positions, on or off, the status of a switch in a quantum computer is determined by the superpositional state of a quantum particle, which, because of the weirdness of quantum mechanics, can be in the positions of on, off or both – simultaneously! The information contained in one quantum switch is called a ‘qubit,’ as opposed to the binary ‘bit’ of today’s digital computers.
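One common way to make the “both at once” idea concrete is to describe a qubit with two numbers, called amplitudes, one for off and one for on. The chance of finding the switch in either position when you look is the square of the size of its amplitude. The sketch below is a textbook-style illustration, not how any particular quantum computer is programmed, and the function names are made up for the example.

```python
import math


def measurement_probabilities(amp_off: complex, amp_on: complex) -> tuple:
    """Chance of reading 'off' or 'on' from a qubit with the given amplitudes.

    The amplitudes are normalized first so the two chances add up to 1.
    """
    total = abs(amp_off) ** 2 + abs(amp_on) ** 2
    return abs(amp_off) ** 2 / total, abs(amp_on) ** 2 / total


def amplitudes_needed(qubits: int) -> int:
    """How many amplitudes it takes to describe a group of qubits."""
    return 2 ** qubits


# An equal mix of off and on: a 50/50 chance of either when measured.
p_off, p_on = measurement_probabilities(1 / math.sqrt(2), 1 / math.sqrt(2))
print(f"off: {p_off:.2f}, on: {p_on:.2f}")

# The description doubles in size with every qubit added.
for n in (1, 2, 10, 50):
    print(f"{n:>2} qubits -> {amplitudes_needed(n):,} amplitudes")
```

Ten ordinary bits hold one ten-digit pattern of 0s and 1s at a time, while describing ten qubits takes 1,024 amplitudes, and the count doubles with each qubit added.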

At its Quantum Artificial Intelligence Laboratory (QuAIL) in Silicon Valley, NASA, in partnership with Google Research and a coalition of 105 colleges and universities, operates the D-Wave 2X, a second-generation, 1,097-qubit quantum computer built by D-Wave Systems. Although it’s difficult to do a direct qubit-to-bit comparison because they are so fundamentally different, Google Research has released some data on its performance. They timed how long it takes the D-Wave 2X to do certain high-level calculations and compared the timings with those of a modern, silicon-based computer doing the same calculations. According to their published results, on those carefully chosen problems the D-Wave 2X was up to 100 million times faster than a conventional processor core like the one in the computer on which you are currently reading this.

Whatever technology eventually replaces the silicon chip, it will be orders of magnitude better, faster and more powerful than what we have today, and it will have an unimaginable impact on the fields of computing, space exploration and artificial intelligence – not to mention the ways in which it will transform our ordinary, everyday lives.

Welcome to the beginning of the computer age, all over again.

Responsible journalism is hard work!

It is also expensive!

If you enjoy reading The Town Line and the good news we bring you each week, would you consider a donation to help us continue the work we’re doing?

The Town Line is a 501(c)(3) nonprofit private foundation, and all donations are tax deductible under the Internal Revenue Service code.

To help, please visit our online donation page or mail a check payable to The Town Line, PO Box 89, South China, ME 04358. Your contribution is appreciated!
